Solving Models

Contents

- 1 How to pick the solver

- 2 Transformers

- 3 Simulator

- 4 Numerical Solvers

- 4.1 Creating a New Numerical Solver

- 4.2 Choosing the Appropriate Numerical Solver

- 4.3 Detailed Descriptions of Supported Numerical Solvers

- 4.3.1 Common options among all analytic solvers

- 4.3.2 Direct steady-state solver

- 4.3.3 Iterative steady-state solver

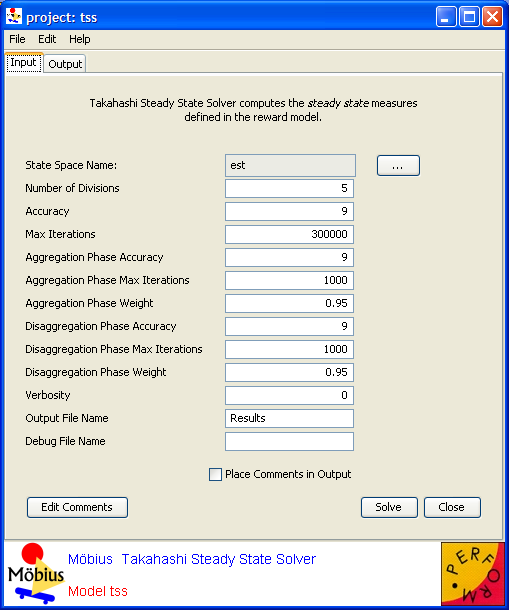

- 4.3.4 Takahashi steady-state solver

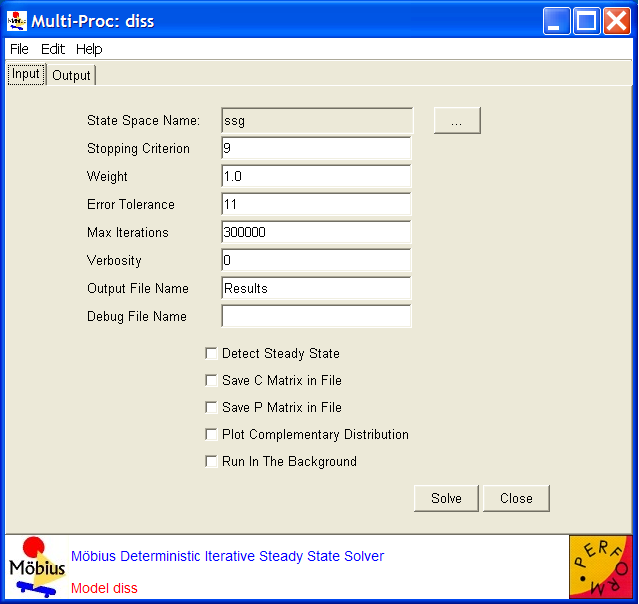

- 4.3.5 Deterministic iterative steady-state solver

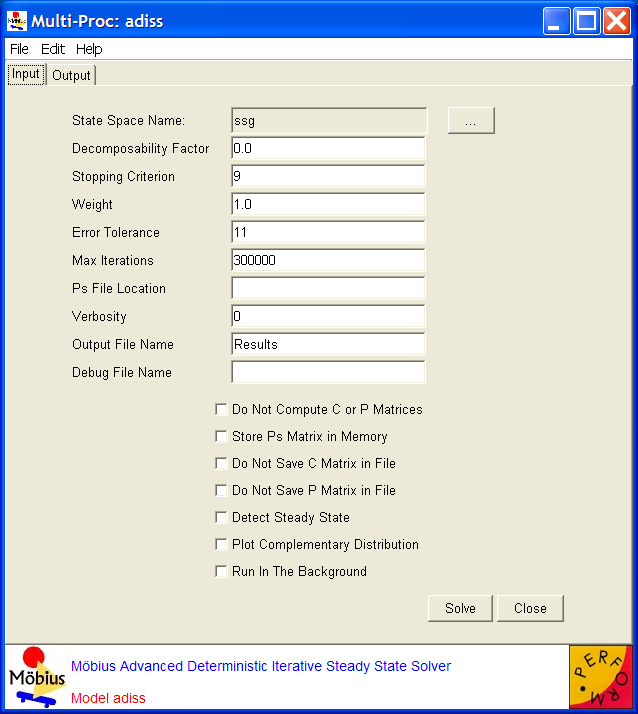

- 4.3.6 Advanced deterministic iterative steady-state solver

- 4.3.7 Transient solver

- 4.3.8 Accumulated reward solver

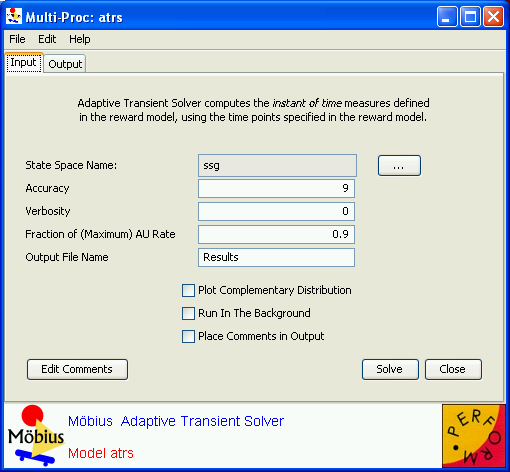

- 4.3.9 Adaptive transient solver

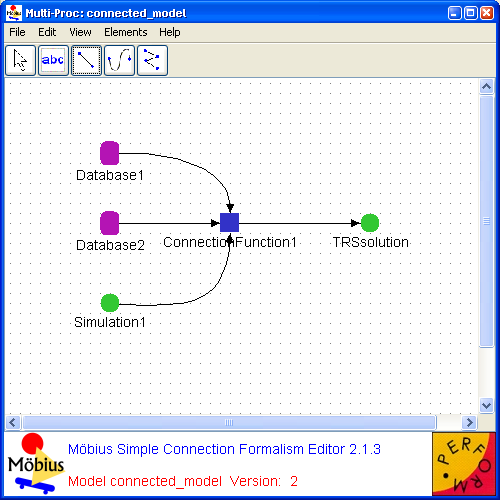

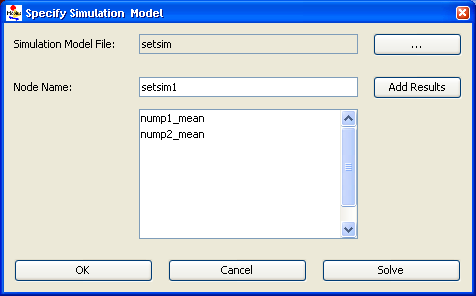

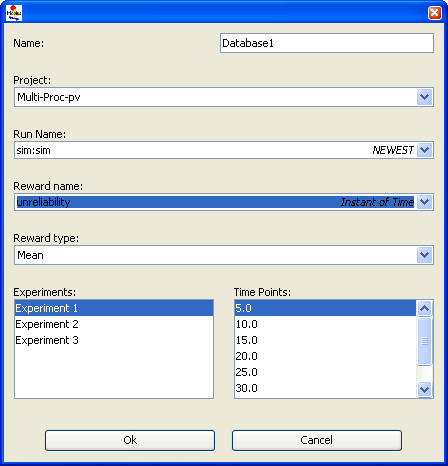

- 5 Connected Model Solver

- 6 References

How to pick the solver

Möbius provides two types of solvers for obtaining solutions on measures of interest: simulation and numerical solvers. The choice of which type of solvers to use depends on a number of factors. More details on these factors are provided in the sections on simulation (Section 3) and numerical solvers (Section 4).

In general, the simulation solver can be used to solve all models that were built in Möbius, whereas numerical solvers can be used on only those modes that have only exponentially and deterministically distributed actions. In addition, simulation may be used on models that have arbitrarily large state-space descriptions, whereas numerical solvers are limited to models that have finite, small state-space description (that may be held in core memory). Furthermore, simulation may be more useful than numerical solvers for stiff models.

On the other hand, all numerical solvers in Möbius are capable of providing exact solutions (up to machine precision), whereas simulation provides statistically accurate solutions within some user-specifiable confidence interval. The desired accuracy of the computed solutions can be increased without excessive increase in computation time for most numerical solvers, while an increase in accuracy may be quite expensive for simulation. Additionally, full distributions may be computed for results from the numerical solvers, but usually not for results from simulation. Furthermore, for models in which numerical solvers are applicable, detection of rare events incurs no extra costs and requires no special techniques, whereas such computation by simulation is extremely expensive and uses the statistical technique of importance sampling.

Transformers

Introduction

Some of the solution techniques within Möbius, such as the simulator, operate directly on the model representation defined using the Atomic and Composed editors described in earlier chapters of the manual. These solvers operator on the model using the Möbius model-level abstract functional interface. There are other solution techniques, specifically the numerical solvers described in the next chapter, which require a different representation of the model as an input. Instead of operating on the high-level model description, numerical solution techniques use a lower-level, state space representation, namely the Markov chain.

Flat State Space Generator

The flat state space generator1 is used to generate the state space of the discrete-state stochastic process inherent in a model. The state space consists of the set of all states and the transitions between them. Once the state space has been generated, an appropriate analytical solver can be chosen to solve the model, as explained in Section 4.

- 1 This was the only state space generator (SSG) available prior to version 1.6.0 and it was simply called the state space generator. From that version on, it is called the flat state space generator.

While simulation can be applied to models with any underlying stochastic process, numerical solution requires that the model satisfy one of the following properties:

- All timed actions are exponentially distributed (Markov processes).

- All timed actions are deterministic or exponentially distributed, with at most one deterministic action enabled at any time. Furthermore, the firing delay of the deterministic actions may not be state-dependent.

The only restrictions on the use of instantaneous (zero-timed) actions are that the model must begin in a stable state, and the model must be stabilizing and well-specified [1]. It should also be noted that the reactivation predicates (see Section 4.2.1 of Building Models) must preserve the Markov property. In other words, the timed actions in the model must be reactivated so that the firing time distributions depend only on the current state, and not on any past state. That rule pertains only to timed actions with firing delays that are state-dependent.

The flat state space generator consists of a window with two tabs, SSG Info and SSG Output, which are discussed below.

Parameters

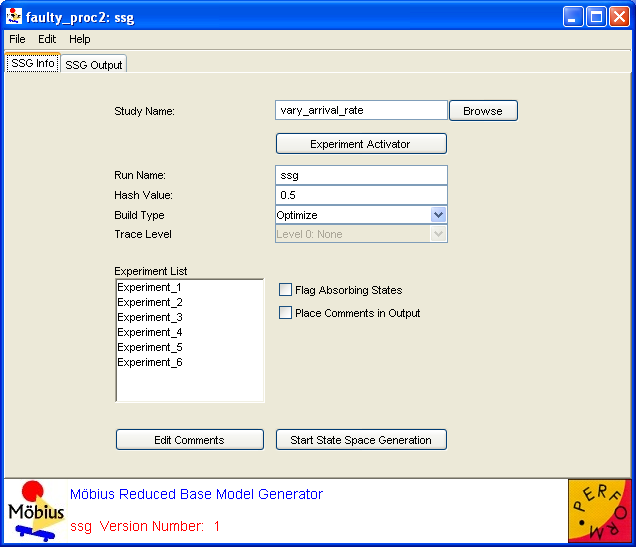

The SSG Info tab (<xr id="fig:ssg_info" />) is presented when you open the interface, and allows you to specify options and edit input to the state space generator.

<figure id="fig:ssg_info">

- The Study Name text box specifies the name of the child study. This box will show the name of the study you selected to be the child when you created the new solver. You can change the child study by typing a new name in the box or clicking the Browse button and selecting the solver child from the list of available studies.

- The Experiment List box displays the list of active experiments in the study, and will be updated upon any changes made through the Experiment Activator button.

- The Experiment Activator button can be used to activate or deactivate experiments defined in the child study, and provides the same functionality as the study editor Experiment Activator described in Section 7.3 of Building Models.

- The Run Name text box specifies the name for a particular run of the state space generator. This field is used to name trace files and output files (see below), and defaults to “Results” when a new solver is created. If no run name is given, the solver name will be used.

- The Build Type pull-down menu is used to choose whether the generator should be run in Optimize or Normal mode. In normal mode, the model is compiled without compiler optimizations, and you can specify various levels of output by changing the trace level, as explained below. This mode is useful for testing or debugging a model, as a text file named

Run Name

Run Name _Exp

_Exp x

x _trace.txt will be created for each experiment and contain a trace of the generation. In optimize mode, the model is compiled with maximum compiler optimizations, and all trace output is disabled for maximum performance. Running the generator will write over any data from a previous run of the same name, so change the Run Name field if you wish to save output/traces from an earlier run.

_trace.txt will be created for each experiment and contain a trace of the generation. In optimize mode, the model is compiled with maximum compiler optimizations, and all trace output is disabled for maximum performance. Running the generator will write over any data from a previous run of the same name, so change the Run Name field if you wish to save output/traces from an earlier run.

- The Build Type pull-down menu is used to choose whether the generator should be run in Optimize or Normal mode. In normal mode, the model is compiled without compiler optimizations, and you can specify various levels of output by changing the trace level, as explained below. This mode is useful for testing or debugging a model, as a text file named

- The Trace Level pull-down menu is enabled only when Normal mode is selected as the Build Type. This feature is useful for debugging purposes, as it allows you to select the amount of detail the generator will produce in the trace. The five options for Trace Level are:

- Level 0: None No detail in the trace. This would produce the same output as if optimize mode were selected.

- Level 1: Action Name For each new state discovered, prints the names of the actions enabled in this state. These are the actions that are fired when all possible next states from the current state are being explored.

- Level 2: Level 1 + State The same as Level 1, with the addition that the current state is printed along with the actions that are enabled in the state.

- Level 3 has not yet been implemented in Möbius.

- Level 4: All The same as Level 2, with the addition that the next states reachable from each state currently being explored are also printed.

- While a higher trace level will produce more detail in the generator trace and aid in debugging a model, it comes at the cost of slower execution time and larger file size.

- The Hash Value text box is used by the hash table data structure, which stores the set of discovered states in the generation algorithm. This number specifies the point at which the hash table is resized. Specifically, it is the ratio of the number of states discovered to the size of the hash table when a resizing2 occurs. The default value of

, which means that the hash table is resized when it becomes half full, should suffice for most purposes.

, which means that the hash table is resized when it becomes half full, should suffice for most purposes.

- The Hash Value text box is used by the hash table data structure, which stores the set of discovered states in the generation algorithm. This number specifies the point at which the hash table is resized. Specifically, it is the ratio of the number of states discovered to the size of the hash table when a resizing2 occurs. The default value of

- The Flag Absorbing States checkbox, when selected, will print an alert when an absorbing state is encountered. An absorbing state is one for which there are no next (successor) states.

- The Place Comments in Output checkbox, when selected, will put the user-entered comments in the generator output/trace. If the box is not checked, no comments will appear, regardless of whether any have been entered.

- The Edit Comments button allows you to enter your own comments for a run of the generator. Clicking this button brings up the window shown in <xr id="fig:ssg_commentbox" />. Type any helpful comments in the box and hit OK to save or Cancel to discard the comments. The Clear button will clear all the text from the comment box.

<figure id="fig:ssg_commentbox">

- The Place Comments in Output checkbox, when selected, will put the user-entered comments in the generator output/trace. If the box is not checked, no comments will appear, regardless of whether any have been entered.

- The Experiment List box displays the list of active experiments in the study, and will be updated upon any changes made through the Experiment Activator button.

2 A resizing rehashes and roughly doubles the size of the hash table.

Generation

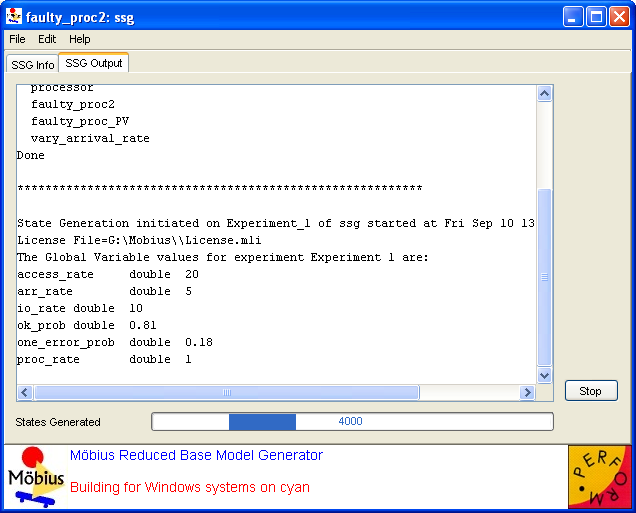

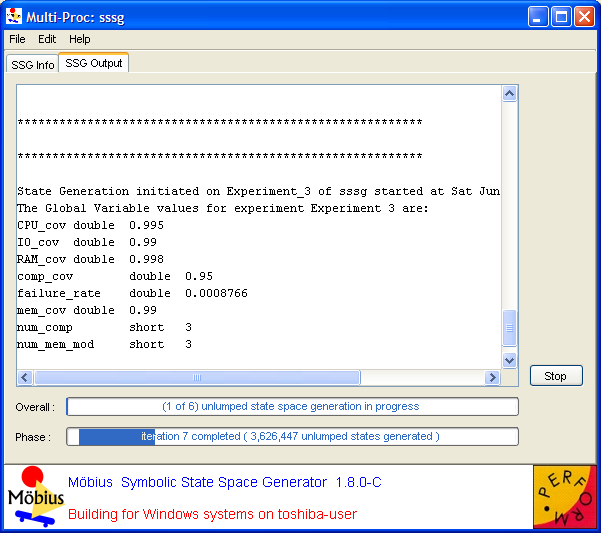

Clicking the Start State Space Generation button switches the view to the SSG Output tab and begins the process of generating the state space. The output window appears in <xr id="fig:ssg_output" />.

<figure id="fig:ssg_output">

First the models3 are compiled and the generator is built. For each active experiment, the values of the global variables are printed; if normal mode is being used, information about the trace level and hash table is also displayed. Then the state space is generated for the experiment. While it is being generated, the States Generated box and progress bar at the bottom of the dialog indicate the number of states that have been discovered. This number is updated once for every 1,000 states generated and when generation is complete. At any time, you may use the Stop button to terminate the run. A text file named  Run Name

Run Name _output.txt will be created. It will mirror the data output in the GUI.

_output.txt will be created. It will mirror the data output in the GUI.

- 3 Here models refers to the atomic/composed models, performance variable, and study.

A final remark is in order about the notion of state during generation of the state space of the stochastic process. In Möbius, a state is defined as the values of the state variables along with the impulse reward (see Section 6.1.1 of Building Models) associated with the action whose firing led to that state. Therefore, for a model on which impulse rewards have been defined, if two different actions may fire in a given state and lead to the same next state, they give rise to two unique states in the state space. The reason is that when impulse rewards are defined, the state space generator must store information about the last action fired. The result is a larger state space for models on which impulse rewards have been defined. It is important to realize this when analyzing the size of the generated state space.

Command line options

The flat state space generator is usually run from the GUI. However, since the models are compiled and linked with the generator libraries to form an executable, the generator can also be run from the command line (e.g., from a system shell/terminal window). The executable is found in the directory

-

Möbius Project Root

Möbius Project Root /

/ Project Name

Project Name /Solver/

/Solver/ Solver Name

Solver Name /

/

-

and is named  Solver Name

Solver Name Gen_

Gen_ Arch

Arch [_debug], where Arch is the system architecture (Windows, Linux, or Solaris) and _debug is appended if you build the flat state space generator in normal mode. The command line options and their arguments are as follows:

[_debug], where Arch is the system architecture (Windows, Linux, or Solaris) and _debug is appended if you build the flat state space generator in normal mode. The command line options and their arguments are as follows:

-P experimentfilepath

- Place the created experiment files in the directory specified by experimentfilepath. This argument can be a relative path. By default, the experiment files will be placed in the current directory.

-N experimentname

- Use experimentname to name the experiment files created. These files will have extensions .arm or .var, and will be placed in the directory specified by the “-P” option.

-B experimentnumber

- Generate the state space for the experiment numbered experimentnumber, with the first experiment having number 0. For example, use “-B1” to run the second experiment (e.g., Experiment_2). Defaults to 0.

-h hash_value

- Use hash_value as the hash value described above. Defaults to 0.5.

-a

- Flag absorbing states.

-F filetype[1-6]

- Use filetype as the format for the experiment file. The only filetype currently supported in the flat state space generator is “-F1,” or row ASCII format, which is also the default option. Other file formats may be available in future versions.

-e, -s db_entity, db_macserver

- These are internal options for interaction with the database. You can safely ignore them.

-l trace_level[0-4]

- Use trace_level as the trace level described above. Only applies when you are running the debug executable.

-t tracefilename

- Create a trace file named tracefilename as output. If this option is not specified, output is directed to the standard output stream.

Example: ssgGen_Windows_debug -P. -B0 -l4 -ttrace.txt

-

Solver Name

Solver Name is ssg

is ssg

-

-

Arch

Arch is Windows

is Windows

-

This command will generate the state space for the first experiment (Experiment_1) with trace level 4, place the experiment files in the current directory, and create a trace file named trace.txt.

Symbolic State Space Generator

The symbolic state space generator (symbolic SSG) is a component that was added to the Möbius tool for version 1.6.0. In this chapter, we first introduce this component, discuss its advantages and limitations, and describe how to use it in an efficient way. Then, we will explain how to fill up each of the input parameters of its corresponding GUI editor. The different phases of the state space generation will be briefly described. Finally, we will provide instructions on how to run the symbolic SSG from the command line. For more details on the inner workings of the symbolic SSG, please see [2] [3].

Introduction

In solving a model using numerical solution, a modeler must first run a state space generator on the model to build a representation of the stochastic process underlying the model, and then use a numerical solver to compute the measures (s)he is interested in.

A numerical solution algorithm needs data structures to represent two essential entities in the memory: the stochastic process (input) and one or more probability distribution vectors (output). The stochastic process is generated by a state space generator. In this chapter, we only consider stochastic processes that are continuous time Markov chains (CTMCs), and can therefore be represented by state transition rate matrices whose entries are the rates of exponentially distributed timed transitions from one state to another.

In many numerical solvers, sparse matrix representation and arrays are respectively used to represent the process and the probability vector(s). In such a design of data structures, the stochastic process represented by a sparse matrix almost always takes more memory space than the probability vectors.

A problem arises when the model under study is so large that the memory requirement of the two data structures exceeds the amount of memory available on a computer. For example, to analyze a model with ten million states for a specific point of time using the transient solver (TRS) and the data structures mentioned above, at least  bytes

bytes  MB to

MB to  bytes

bytes  MB (depending on the model) of RAM are needed, assuming that each state leads to 10 to 20 other (successor) states and each floating-point number requires 8 bytes of storage.

MB (depending on the model) of RAM are needed, assuming that each state leads to 10 to 20 other (successor) states and each floating-point number requires 8 bytes of storage.

That problem has stimulated a lot of research looking for more “practical” data structures, i.e., more compact (with smaller space requirements) data structures that do not incur too much time overhead. The most practical data structure that virtually all numerical solvers (including ones in Möbius) use to represent probability distribution vectors is simply an array. Efforts by researchers to design better ones have failed so far.

However, there has been a lot of successful research on design of data structures for the representation of the state transition matrix. In particular, symbolic data structures (hence the name symbolic SSG) are widely used nowadays to store state transition matrices in a very compact manner. As opposed to sparse matrix representation, symbolic data structures do not have space requirements proportional to the number of nonzero entries. In virtually all cases, they take up orders of magnitude less space than their sparse representation counterparts. This extremely low space requirement enables the modeler to numerically solve models that are much larger than was previously possible. For example, to do the same analysis we mentioned above, we only need about 100 MB of RAM using symbolic data structures. Because of the concept behind symbolic data structures and the way they are constructed, we see their benefits only in composed models, especially those consisting of atomic models of appropriate sizes; more information on this will be provided later in the section.

The major price a modeler has to pay is overhead in speed. Based on our measurements, when the symbolic SSG is used to generate the state space, numerical solution is expected to be about 3 to 10 times slower than it would be for the flat SSG. Notice that slowdown is not related to the state space generation itself. In fact, in virtually all cases, the symbolic SSG is faster than the flat SSG; in some cases it may be several times faster.

The symbolic SSG utilizes three types of symbolic data structures to represent the required entities. It uses MDDs (Multi-valued Decision Diagrams) for the set of reachable states, MxDs (Matrix Diagrams) for the state transition rate matrices, and MTMDDs (Multi-Terminal MDDs) for the values of the performance variables in each reachable state.

The symbolic SSG utilizes two types of lumping algorithm. Lumping is a technique in which states of a CTMC that are behaviorally “equivalent” are lumped together and replaced by a single state. Therefore, the number of states of a “lumped” CTMC is always equal to or smaller than the number of states of the original CTMC. The performance variables computed from a lumped CTMC are exactly the same as the performance variables computed from the original one. That means that we save on both time and space if we solve a smaller lumped CTMC rather than the original one. For example, models that consist of a number of equal parts or components have a potential for lumping that reduces the size of the state space significantly.

The first lumping technique is called model-level lumping, in which the symmetry of a Replicate/Join model is exploited as explained in Section 5.1.1 of Building Models to reduce the size of the state space. That lumping technique has been part of the symbolic SSG from the beginning.

The second lumping technique that has been added to symbolic SSG in version 1.8.0 is called compositional lumping. In compositional lumping, the lumping algorithm is applied to individual components (atomic models in the Möbius context) of a compositional model, whereas in state-level lumping the algorithm is applied to the overall CTMC generated from the model. In fact, that is the main advantage of the compositional lumping technique over state-level lumping; it is applied on CTMCs of components that are much smaller than the CTMCs of the whole model. That advantage means that, in general, compositional lumping algorithms require less time and memory space for their operation. Möbius supports ordinary compositional lumping. See [3] for more information.

To summarize, the symbolic SSG uses compact data structures to represent the state transition rate matrix of Markov chains such that it devotes most of the available RAM to the storage of the probability distribution vector(s). If possible, it uses model-level and compositional lumping algorithms to reduce the size of the state space of the CTMC that needs to be solved in order to compute the desired performance variables. That makes it possible for the modeler to numerically analyze models that are orders of magnitude larger than was previously possible using flat SSG and sparse matrix representation.

Limitations

As we mentioned above, speed is the major price we pay in using symbolic data structures. Moreover, there are a number of conditions that have to be satisfied before we can use the symbolic SSG:

- The stochastic process underlying a model has to be Markov. Therefore, only exponential and immediate (i.e., zero-timed) transitions are allowed.

- If the model under study is a composed one, immediate transitions must not affect or be affected by shared state variables.

- The reward model must not have impulse reward definitions.

- The starting state has to be stable (not vanishing), i.e., no immediate transition should be enabled in the starting state.

If any of the above conditions is not met for a model, the symbolic SSG is not usable, and its GUI shows a message indicating the unmet condition(s).

Finally, when using the iterative steady state solver, it is not possible to use the SOR (Successive Over Relaxation) variant with state spaces that are generated using symbolic SSG. Instead, the Jacobi variant should be used. The reason is that the symbolic data structure constructed by the SSG does not provide the elements of the state transition matrix in any specific order (see Section 4.3.3).

Tips on efficient use

The time and space efficiency of the symbolic SSG can vary widely depending on the way the model is built. We have observed up to one order of magnitude of speed difference among a number of composed models that result in the same underlying Markov chain. Theoretically speaking, even larger differences are possible. Here are two guidelines that will help modelers construct their models in a way that increases the performance of the symbolic SSG:

- Do not use symbolic SSG for atomic models, especially large atomic models. Not only does it not give any better performance, it drastically reduces both time and space efficiency of state space generation and numerical analysis. For those types of models, simply use the flat state space generator.

- Try to decompose the complete model into atomic models such that each of them has roughly 3 to 50 states. In the general case, it is impossible to estimate how many states an atomic model has. However, the modeler can sometimes make an estimation. Above 50 states, the larger the atomic models’ state spaces are, the more the state space generation performance will be negatively affected.

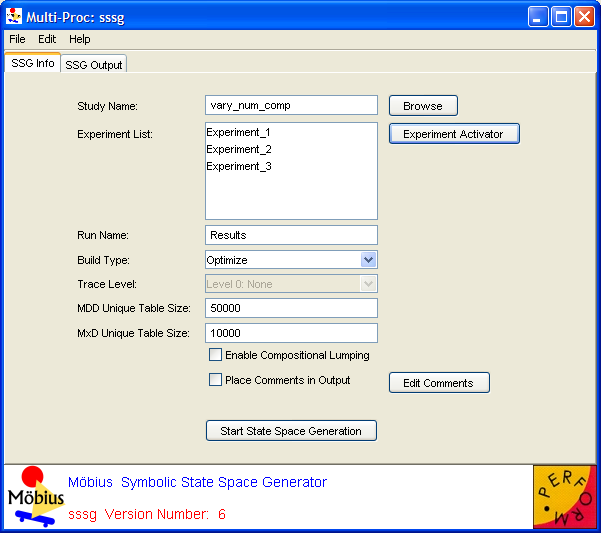

Parameters

The SSG Info tab (<xr id="fig:symssg_info" />) is presented when you open the interface, and allows you to specify options and edit input to the symbolic state space generator.

<figure id="fig:symssg_info">

- The Study Name text box specifies the name of the child study. This box will show the name of the study you identified as the child when you created the symbolic SSG. You can change the child study by typing a new name in the box or clicking the Browse button and selecting the solver child from the list of available studies.

- The Experiment List box displays the list of active experiments in the study, and will be updated in response to any changes made through the Experiment Activator button.

- The Experiment Activator button can be used to activate or deactivate experiments defined in the child study, and provides the same functionality as the study editor Experiment Activator described in Section 7.3 of Building Models.

- The Run Name text box specifies the name for a particular run of the symbolic state space generator. This field is used to name trace files and output files (see below), and defaults to “Results” when a new symbolic SSG is created. If no run name is given, the symbolic SSG name will be used.

- The Build Type pull-down menu is used to choose whether the generator should be run in Optimize or Normal mode. In normal mode, the model is compiled without compiler optimizations, and you can specify various levels of output by changing the trace level, as explained below. This mode is useful for testing or debugging a model, as a text file named

Run Name

Run Name _Exp

_Exp x

x _trace.txt will be created for each experiment and contain a trace of the generation. In optimize mode, the model is compiled with maximum compiler optimizations, and all trace output is disabled for maximum performance. Running the generator will write over any data from a previous run of the same name, so change the Run Name field if you wish to save output/traces from an earlier run.

_trace.txt will be created for each experiment and contain a trace of the generation. In optimize mode, the model is compiled with maximum compiler optimizations, and all trace output is disabled for maximum performance. Running the generator will write over any data from a previous run of the same name, so change the Run Name field if you wish to save output/traces from an earlier run.

- The Build Type pull-down menu is used to choose whether the generator should be run in Optimize or Normal mode. In normal mode, the model is compiled without compiler optimizations, and you can specify various levels of output by changing the trace level, as explained below. This mode is useful for testing or debugging a model, as a text file named

- The Trace Level pull-down menu is enabled only when Normal mode is selected as the Build Type. This feature is useful for debugging purposes, as it allows you to select the amount of detail the generator will produce in the trace. The two options for Trace Level are:

- Level 0: None The processing time for each phase of the generation is printed out (see the next section for more on generation phases). Moreover, the numbers of unlumped and lumped states are also shown. The ouput is the same as it would be if optimize mode were selected.

- Level 1: Verbose This includes everything printed in Level 0, plus detailed information about the symbolic data structures, such as the number of levels, the size of the nodes in each level, the final number of nodes, the maximum number of nodes during the runtime, and the number of firings of local and global actions.

- The symbolic SSG does not produce a great deal of debugging information; therefore, you will not notice much difference between the execution times of the two different trace levels.

- The numbers entered in the MDD Unique Table Size and MxD Unique Table Size text boxes are used by the MDD and MxD data structures. They are the sizes of the hash tables used to store all the unique nodes of the MDD and MxD data structures, respectively. Their default values are 50,000 and 10,000, and their minimum values are 5,000 and 1,000, respectively.

- There is no clear-cut set of rules on how to set these numbers. The default values should be appropriate for most models. For extremely large models for which the state space generation takes much more than an hour, double or quadruple the default values. Increasing the values above a certain value deteriorates the performance of the SSG algorithm.

- The Enable Compositional Lumping checkbox, when selected, activates the compositional lumping algorithm, which for some models (but not all of them) reduces the size of the CTMC that needs to be solved. Although enabling the algorithm increases the running time of the state space generation, in some cases, it leads to significant reduction in the numerical solution time.

- The Place Comments in Output checkbox, when selected, will put the user-entered comments in the generator output/trace. If the box is not checked, no comments will appear, regardless of whether any have been entered.

- The Edit Comments button allows you to enter your own comments for a run of the generator. Clicking this button brings up the window shown in <xr id="fig:ssg_commentbox" />. Type any helpful comments in the box and hit OK to save or Cancel to discard the comments. The Clear button will clear all the text from the comment box.

Generation

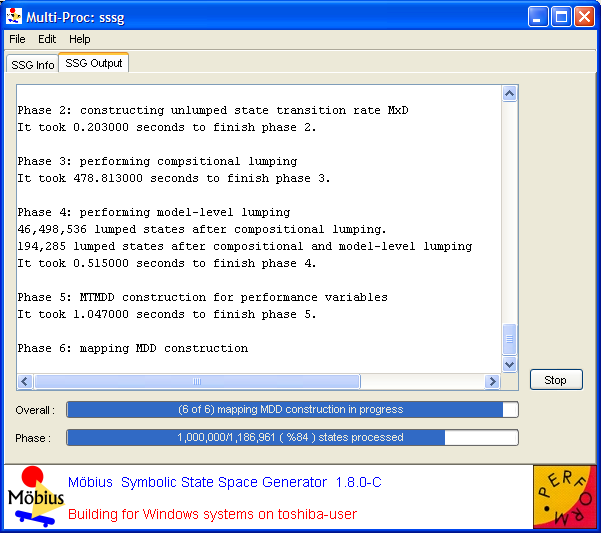

Clicking the Start State Space Generation button switches the view to the SSG Output tab and begins the process of generating the state space. Snapshots of the output window in different phases of the generation appear in <xr id="fig:symssg_output" />.

<figure id="fig:symssg_output">

<subfigure> </subfigure> </subfigure>

|

<subfigure> </subfigure> </subfigure>

|

| (a) Just after starting. | (b) Performing phase 6 (compositional lumping is enabled). |

As for the flat state space generator, the generation begins by compiling the models4 and building the generator. For each active experiment, the values of the global variables are printed.

- 4 Here models refers to the atomic/composed models, performance variable, and study.

The state space is then generated for the experiment in six phases if compositional lumping is enabled, and in five phases otherwise. Two progress bars at the bottom of the dialog show the amount of completed work. The Overall progress bar indicates which phase is in progress and the overall progress of the generation, and the Phase progress bar indicates the progress of each phase of the generation. The amount of time taken to complete each phase is printed after the phase is finished. At any time, you may use the Stop button to terminate the run. A text file named  Run Name

Run Name _output.txt will be created. It will mirror the data output in the GUI.

_output.txt will be created. It will mirror the data output in the GUI.

The 6 phases are:

- The unlumped state space is generated in the form of an MDD data structure in a number of iterations (see Section 5.1.1 of Building Models for definitions of lumped and unlumped state spaces). The total number of iterations depends on the model and is not known in advance. After each iteration is complete, the Phase progress bar shows the iteration number and the number of unlumped states that have been explored so far. When this phase is completed, the total number of explored unlumped states is printed. Phases 1, 3, and 6 are often the most time-consuming phases of the generation process.

- The MxD data structure representing the state transition rate matrix of the unlumped CTMC is built in this phase. This phase is usually completed very quickly.

- In this phase and the following one, lumping algorithms are applied on the state space to reduce the size of the CTMC that needs to be solved to compute the desired performance variables. In this phase, the compositional lumping algorithm is applied on the unlumped state space. The size of the state space lumped by the compositional lumping algorithm is printed out at the end of the step. This phase is performed in two subphases. In the first one, the initial partition for each atomic model is computed based on the values of the performance variables. In the second subphase, which is based on an efficient partition-refinement algorithm[3], the initial partition is refined repeatedly to compute the lumped state space for each atomic model. Virtually for all models, the second subphase is much faster than the first subphase.

- In this phase, the model-level lumping induced by Replicate/Join operators is performed. The size of the state space after compositional and model-level lumping is printed out at the end of this step. This phase usually takes the least amount of time of all the phases.

- For each of the performance variables defined on the model, one MTMDD symbolic data structure is built. This data structure gives the value of that performance variable for each state of the lumped state space.

- The “mapping” MDD, a special type of the MDD data structure, is constructed in this phase[2]. This phase has two subphases. In the first, the set of states of the mapping MDD is computed; during this process, the progress bars are not updated because the cardinality of the set is not known yet. In the second subphase, the mapping MDD construction actually starts. While the mapping MDD is being built, the Phase progress bar shows the number of states of the mapping MDD built so far, the total number of states of the mapping MDD, and the fraction of the phase that has been completed. The Overall progress bar is also updated accordingly.

Command line options

The symbolic state space generator is usually run from the GUI. However, since the models are compiled and linked with the generator libraries to form an executable, the generator can also be run from the command line (e.g., from a system shell/terminal window). The executable is found in the directory

-

Möbius Project Root

Möbius Project Root /

/ Project Name

Project Name /Solver/

/Solver/ Solver Name

Solver Name /

/

-

and is named  Solver Name

Solver Name SymGen_

SymGen_ Arch

Arch [_debug], where Arch is the system architecture (Windows, Linux, or Solaris) and _debug is appended if you build the symbolic state space generator in normal mode. The command line options and their arguments are as follows:

[_debug], where Arch is the system architecture (Windows, Linux, or Solaris) and _debug is appended if you build the symbolic state space generator in normal mode. The command line options and their arguments are as follows:

-P experimentfilepath

- Same as the flat state space generator. For more details, refer to Section 2.2.3.

-N experimentname

- Same as the flat state space generator. For more details, refer to Section 2.2.3.

-B experimentnumber

- Generate the state space for the experiment numbered experimentnumber, with the first experiment having number 0. For example, use “-B1” to run the second experiment (e.g., Experiment_2). Defaults to 0.

-c

- Enables the compositional lumping algorithm, which is disabled by default.

-e, -s db_entity, db_macserver

- These are internal options for interaction with the database. You can safely ignore them.

-l trace_level[0-1]

- Use trace_level as the trace level described above. Only applies when you are running the debug executable.

-t tracefilename

- Create a trace file named tracefilename as output. If this option is not specified, output is directed to the standard output stream.

-d MDD_Utable_size, union_cache_size, local_exploration_cache_size, MxD_Utable_size, submat_cache_size, add_cache_size

- These are the different parameters for the symbolic data structures. They should follow the -d option in the form of a comma-separated list of non-negative numbers. The numbers in parentheses are the default value and the minimum value enforced by the symbolic SSG.

- MDD_Utable_size The size of the unique table of the MDD (50,000 and 1,000)

- union_cache_size The cache size of the union operation of the MDD (10,000 and 500)

- local_exploration_cache_size The cache size of the local state space exploration operation of the MDD (10,000 and 500)

- MxD_Utable_size The size of the unique table of the MD (10,000 and 500)

- submat_cache_size The cache size of the submatrix operation of the MD (5,000 and 100)

- add_cache_size The cache size of the add operation of the MD (5,000 and 100)

- This command line option is optional. If it is not given, the default values will be used. If the option is present and the value for a parameter is zero or not given (i.e., there is no character between two consecutive commas) the default value for that parameter is used.

Example: sssgSymGen_Linux_debug -P. -B0 -l1 -ttrace.txt

-d 50000,,10000,0,5000,5000

-

Solver Name

Solver Name is sssg

is sssg

-

-

Arch

Arch is Linux

is Linux

-

This command will generate the state space for the first experiment (Experiment_1) with trace level 1, place the experiment files in the current directory, and create a trace file named trace.txt. All parameters for the symbolic data structures except union_cache_size and MD_Utable_size are given by the user via the -d option. For those two parameters the default values are used.

Simulator

The simulator can be used to solve models using discrete event simulation. It takes the model and parameters specified by a study, links the libraries for those models together with the simulator library, and runs the executable. The simulator can be used to solve any model specified in Möbius, and can be used to solve for transient and steady state results.

Simulation Parameters

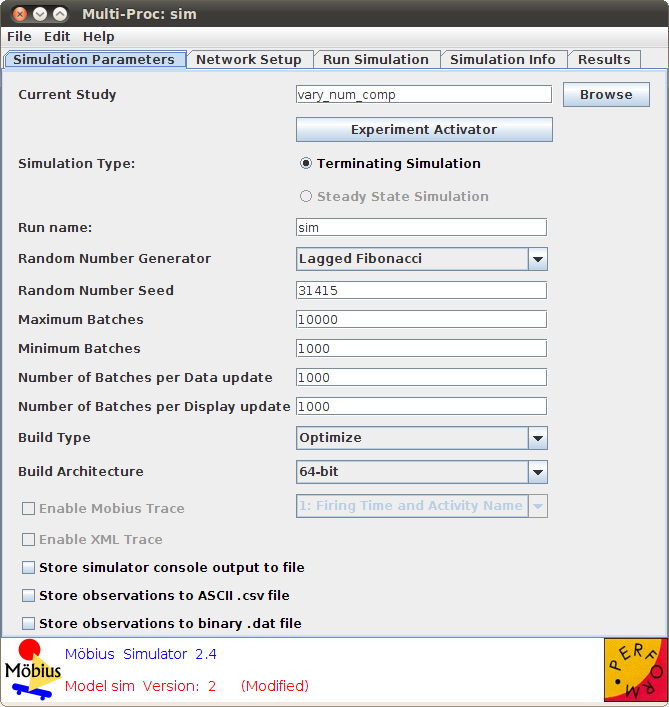

The Simulation Parameters tab on the Simulator editor allows you to specify all of the simulation parameters and is shown in <xr id="fig:sim_parameters" />.

<figure id="fig:sim_parameters">

The parameters on the page can be roughly broken down into four categories that proceed down the tab.

- Study and experiment selection

- Simulation execution parameters

- Compilation options

- Checkboxes

Study and experiment selection

The top of the tab starts with the Current Study field, which contains the name of the study to be solved. The study is initially set when you create the simulation, and you are prompted to specify the solver child. You can change the study by typing a new study name into the field, or by clicking the Browse button. If you click on the Browse button, the Select Solver Child window will pop up. It shows all of the studies and prompts you to select one.

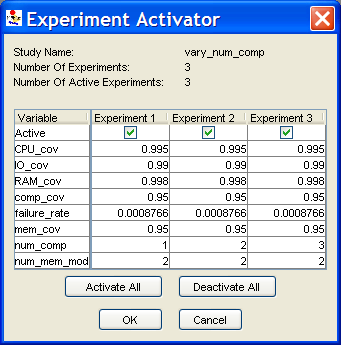

The Experiment Activator button opens a pop-up window (shown in <xr id="fig:sim_experiment" />) to allow you to view and select the experiments in the study. Recall that all global variable values are assigned in the study, and that each unique set of assignments is called an experiment. The pop-up window shows all of the experiments in the study, the global variable values for each experiment, and the experiments that have been activated in this simulation so that they will be solved. You can activate or deactivate an experiment by checking the box above the experiment. You can activate or deactivate all experiments by clicking on the Activate All or Deactivate All buttons.

<figure id="fig:sim_experiment">

Simulation execution parameters

The next set of parameters affect the execution of the simulation.

- The radio boxes under Simulation Type specify whether the model solution should be a steady state solution or a terminating solution. If the model contains transient rewards, the Terminating Simulation option will be enabled, and if the model contains steady state rewards, the Steady State Simulation option will be enabled. If all the rewards are either transient or steady state, only one option will be enabled, and it will be selected by default.

- The pull-down menu Random Number Generator is used to select the pseudo-random number generator to use in the solution. The default option is the Lagged Fibonacci random number generator, but the Tauseworthe random number generator can be used.

- The Random Number Seed field specifies the seed for the pseudo-random number generator. The default value is 31,415, but any value can be used. Once the seed has been specified, the sequence of numbers is deterministic. Multiple simulations with the same random number seed should generate the same results5.

- The Maximum Batches field specifies the maximum number of batches (in steady state simulation) or replicas (in transient simulation) that the simulator will run. The simulator will run several batches or replicas to generate data for the confidence intervals. The simulator will continue to run more batches or replicas until all of the results have converged to their specified confidence intervals. This option will terminate the simulation if the simulator has run the maximum number of batches, even if not all of the reward variables have converged to their specified confidence intervals.

- The Minimum Batches field specifies the minimum number of batches (in steady state simulation) or replicas (in transient simulation) that the simulator will run. The simulator will run several batches or replicas to generate data for the confidence intervals. The simulator will continue to run more batches or replicas until all of the results have converged to their specified confidence intervals. This option ensures that the simulator will run at least the minimum number of batches or replicas before finishing. This is useful in simulating rare events, for which the model’s reward variables may appear to converge too early if no rare events have occurred.

- The Number of Batches per Data update field specifies how many batches or replicas the simulation executables should complete before sending those results back to the editor. If the simulation is run on multiple machines, each machine will send an update after simulating the specified number of batches or replicas.

- The Number of Batches per Display update field specifies how many batches or replicas should be received by the editor before it updates the user interface. A low number will provide the most up-to-date data, but can cause the user interface to use too many resources. A larger number will reduce the load created by the user interface, but will also provide fewer updates. In general, this number should always be greater than or equal to the number specified in Number of Batches per update.

5 The simulator is multi-threaded and can be distributed. Due to timing variations in a distributed environment, the results will not necessarily be identical when an experiment is simulated multiple times with the same random number seed, even though the random number sequences will be identical.

Compilation options

The next set of options control how the simulation is compiled and run, allowing for optimizations, trace output, and specification of the run name.

- The Build Type pull-down menu allows you to set the model to run in either optimized or normal mode. In normal mode, the model is compiled without compiler optimizations, and it is possible to specify various levels of output by changing the trace level. In optimized mode, the model is compiled with compiler optimizations, and all trace output is disabled for maximum performance. You would normally use normal mode for testing a model and making sure it is behaving properly, while you would use optimized mode for production simulations.

- The Build Architecture pull-down menu allows you to set the model to execute in either 32-bit or 64-bit mode. On 32-bit machines (or machines running a 32-bit operating system), this drop down menu will be disabled and only 32-bit execution is available.

- The Trace Level pull-down menu allows you to set the level of detail included in a trace of the simulation. The trace includes key information about the evolution of the simulation in a text file, which can be used to debug a model. The available trace levels are:

- – 0: No Output: No trace file is generated.

- – 1: Firing Time and Activity Name: This option will include the simulation time of every activity completion, and the name of the activity that fired.

- – 2: Level 1 plus Minimal State: This level adds some relevant state information from the time of the activity firing to the previous level’s activity firing times and names. Specifically, it shows the values of state variables that are either part of the enabling conditions for the activity, or part of the state affected by the activity.

- – 3: Level 1 plus All State: This level prints out all of the state information at every activity firing, in addition to the name of the activity and the time it fires.

- – 4: All: This level prints out all of the information from the previous levels plus more data on which activities are enabled, become enabled, or become disabled when an activity fires.

- The trace-level options can generate useful information that you can use to understand the behavior of your models. However, it can also generate more information that you want. Generally, trace output is only used with a small number of batches.

- The Run name field specifies the name for this run of the simulation. This is used to name trace files and output files. Change the run name any time you want to save existing results from a previous run of the simulator. Otherwise, the new results will overwrite any existing results.

Checkboxes

The last set of options are set using checkboxes. Most of them control the output of the simulator, while one of them controls the variance calculation.

- Store simulator console output to file stores all of the output from the editor display to a file.

- The Store observations to ASCII .csv file option can be used to save the raw simulation results to a file for later use. Again, this option is useful if you are running remote simulations over a low-bandwidth connection, because you can access the observations at a later time to get the results of the simulation. The option stores the observations in an ASCII file format. ASCII is human-readable, and can also be loaded into tools like Excel for custom analysis.

- The Store observations to binary .dat file option can be used to save the raw simulation results to a file for later use. This option is useful if you are running remote simulations over a low-bandwidth connection. You can access the observations at a later time to get the results of the simulation. The option stores the observations in a binary file, which should be smaller than the ASCII file the other option would have produced. The binary files are used by Möbius for off-line processing (see Section 3.3.2).

- There used to be a checkbox in the Simulation Parameters tab called Use Jackknife Variance Calculation that made the simulator use the advanced jackknife method of variance calculation. In Möbius 1.8.0 and later, the method is always used; therefore, the checkbox has been removed.

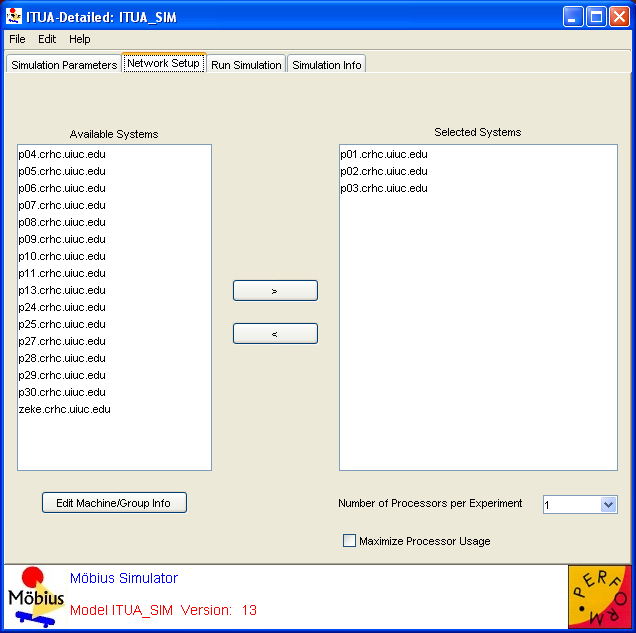

Network Setup

Use the Network Setup tab, shown in <xr id="fig:sim_network" />, to select the computers on which the simulation will be run. You can parallelize simulations by executing multiple replicas of the simulation on different machines at the same time, with near linear speed-up in solution time. On the left of the tab is the Available Systems list of machines or groups of machines that can be used in the solution of the model. You can select one or more machines for use by selecting their names in the list, and then clicking on the >> button. Clicking on the button will transfer all of the selected machines to the Selected Systems list. You can deselect machines in the same manner, by selecting their names in the Selected Systems list and clicking on the << button.

<figure id="fig:sim_network">

You can edit the list of available systems by clicking on the Edit Machine/Group Info button. Clicking on the button opens the Network Machine and Group Settings dialog, which is described in Section ??.

The Number of Processors per Experiment drop-down menu allows you to select the number of processors to use per experiment. The simulator can assign multiple machines to simulate the same experiment, and each experiment will be assigned a number of machines no greater than the specified number. Machines will be assigned to the lowest-numbered experiment that has not yet been solved and that has fewer than the specified number of machines.

The Maximize Processor Usage checkbox overrides the Number of Processors per Experiment setting. If the Maximize Processor Usage option is enabled, the simulator will distribute experiments to all the machines, such that each experiment is running on a similar number of machines. When an experiment finishes, the machines that were assigned to it are then reassigned to the other experiments. In that way, all of the experiments are solved as quickly as possible.

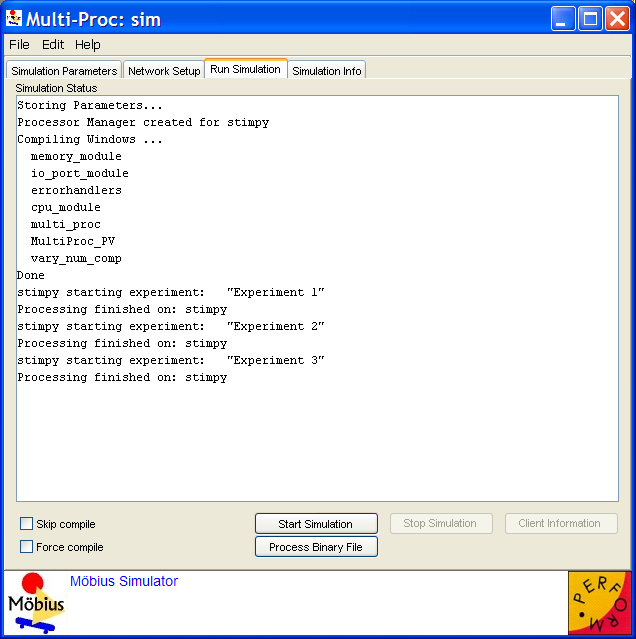

Running Simulation

The Run Simulation tab, shown in <xr id="fig:sim_run" />, is used to start and stop the simulator. As the simulation is being compiled and run, messages are produced and are shown in the Simulation Status area. The information includes the name of all the files being compiled and linked, and indicates when the execution of the simulation begins.

<figure id="fig:sim_run">

On the bottom of the tab are a couple of check boxes and a series of buttons to control the running of the simulation. The checkboxes Skip compile and Force compile control whether the underlying files are compiled before starting the simulations. By default, the simulator editor will recompile the minimum set of files before starting the simulations for the first time if neither checkbox is checked.

There are two modes for generating simulator results. The first mode is on-line, in which the simulation executables are run and the results are computed and displayed by the editor as the solution converges. The second mode is off-line. There are two steps in off-line simulation: the first step is the launch of the simulation executables with the binary output file option enabled, and the second step is the processing of the binary output files when the simulator processes have completed. The two modes are discussed in the following sections.

On-line simulation

The Start Simulation button starts the simulator. Any files that should be recompiled are recompiled, and experiments are assigned to the selected machines.

The Stop Simulation button will stop a running simulation. It will stop all of the simulations started on remote machines, and finish any remaining result computation.

The Client Information dialog, shown in <xr id="fig:sim_clientinfo" />, is used to check the status of a client machine during solution. The Client Information window will pop up with a list of client machines used in this simulation. When you double-click on any of the system names, the Client Status window, shown in <xr id="fig:sim_clientstatus" />, will pop up to provide the name of the system, the architectures, and the operating system.

<figure id="fig:sim_clientinfo">

<figure id="fig:sim_clientstatus">

Off-line simulation

The Process Binary File button allows you to process a binary output file that was generated if you checked the Store observations to binary .dat file option, as described in Section 3.1.4. When you press the button, a file browser will open to allow you to select the appropriate binary data output file(s). Once you have selected the files, Möbius reads them and processes the data as if the simulation was running on-line, and displays the results after all data has been read.

Binary data files can be generated through multiple simulation runs. You can launch runs with the Möbius simulation editor or launch the simulation executable directly via the command line.

One reason to use the off-line processing capability is to generate solutions without having to run the Möbius graphical interface. You can launch jobs on the command line (directly or via user-constructed command line scripts), collect the results, and transfer them to a machine with graphical access on which the data files can be processed to compute the results.

Another reason to use off-line processing is to generate more simulation replications than could be generated in one session. Repeated executions of the simulator (using a unique random seed and run name each time) will produce multiple sets of data files. These files can be processed together at one time to generate results based on all the runs.

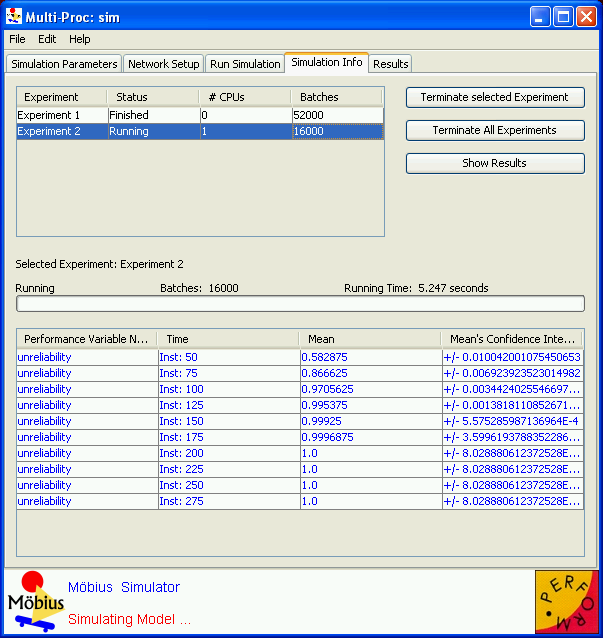

Simulation Info

The Simulation Info tab displays the results for the experiments as they become available, and is shown in <xr id="fig:sim_results" />. At the top left of the tab is the list of all the enabled experiments and their statuses. The Status column indicates whether the experiment has not started, is running, or has completed. The # CPUs column indicates how many processors are currently assigned to the given experiment, and the Batches column indicates how many batches or replicas of that experiment have completed and been reported back to the user interface. You can select an experiment by clicking on its row in the table. Selecting the experiment in the list updates the Selected Experiment display in the lower half of the window. The Selected Experiment display shows the simulation progress by reporting the number of replications computed and showing the mean and variance values and confidence intervals for each reward variable. When the result converges so that the computed confidence interval is within the tolerances specified in the Simulation panel of the Performance Variable Editor (see Section 6.1.6 of Building Models), the color of the reward variable row changes from red to blue.

<figure id="fig:sim_results">

There are three buttons to the right of the experiment list:

- The Terminate Selected Experiment button stops the solution of the currently selected experiment. All machines that are simulating that experiment stop doing so, and are reassigned.

- The Terminate All Experiments button stops the solution of all the experiments. The simulator receives the final updates and displays the final results.

- The Show Results button opens the Simulation Results window, which is discussed in the next section.

Simulation Results

The results of the simulation are displayed in the Simulation Results tab when the simulation finishes or the user clicks on the Show Results button of the Simulation Info tab. The window displays the human-readable text output created by the simulator. The file includes all of the parameters for the model, all of the experiments, and all of the results. In addition to the human-readable output, a comma-separated-value file (.csv) is also created. The csv file is designed to be simple to import into third-party plotting and analysis tools (such as Excel).

Command Line Options

The simulator is normally run from the simulator editor. However, it can also be run from the command line. The simulator executable resides in the project tree, in a path following this pattern:  Möbius Project Root

Möbius Project Root /

/ Project Name

Project Name /Solver/

/Solver/ Solver Name

Solver Name /. It can be run with the command line options listed in <xr id="tab:command_options" />. These options will be shown if you execute the simulator on the command line, without any options.

/. It can be run with the command line options listed in <xr id="tab:command_options" />. These options will be shown if you execute the simulator on the command line, without any options.

<figtable id="tab:command_options">

| Option | Description |

|---|---|

| -a <name> | Write ASCII output to file <name>. |

| -b <name> | Write binary output to file <name>. |

| -c <num> | Client identification number (default = 0). |

| -e <num> | Experiment to run, “Experiment 1” is num=0 (default = 0). |

| -f <name> | Trace file name (default=<stdout>). |

| -l <num> | Trace level (0-off, 1,2,3,4 [default = 0]). |

| -n <num> | Num observations sent in 1 update to simulator editor (default = 1000). |

| -N <num> | Maximum number of batches to run (default = infinite). |

| -p <num> | Communication port of network server (default = 10000). |

| -r <num> | Random number generator

(0 = lagged Fibonacci [default], 1 = Tauseworthe). |

| -s <num> | Random number seed (31,415 [default]). |

| -t <num> | Simulation type (1 = terminating [default], 0 = steady state). |

| -w | Simulator will wait to send output until the socket connection has been established. Used when the simulator is launched by the Möbius editor. |

Numerical Solvers

This section discusses the numerical (or analytic) solvers that are supported in Möbius. A number of solvers are available for computing solutions to various types of performability measures. This chapter begins by explaining how a new solver can be created. It then gives guidelines for choosing the appropriate solver for a given model class and measures of interest. Finally, it discusses in detail all of the available solvers and their relevant parameters. The discussion of each solver includes tips on pitfalls to avoid and hints for effective use of the solver.

Creating a New Numerical Solver

All of the solvers presented in this chapter compute their solutions by analyzing the underlying state space of a model. The state space is generated using the state space generator, as described in Section 2.2. You can create a new numerical solver by selecting Numerical on the Project panel and clicking the button New. A dialog window will appear, requesting a name and the type of solver to use. After you have entered the name and chosen the type of solver, a dialog appears, requesting the name of the state space to be analyzed. You can choose the desired state space by clicking on one of the choices presented in the dialog window Select Numerical Child. After you select the state space, the main editor for the numerical solver appears. You use the editor to enter the relevant parameters for your solver. The parameters corresponding to each solver are explained in the sections below. After a solver has been created, you can open it for revision by simply double-clicking on its name or by selecting its name and clicking the Open button on the Project panel.

Choosing the Appropriate Numerical Solver

The choice of which numerical solver to use depends on the type of a solvable model and the type of performability measures of interest. The type of a solvable model is determined by the distributions of its actions (see Section 1.2.5 of Modeling Background for an explanation of the numerous distributions supported by Möbius). A solvable model may be one of two types:

- Markov Model: All timed actions in models of this type are exponentially distributed.

- Deterministic Model: Timed actions in models of this type may be a mixture of exponential and deterministic distributions. However, there can be no more than one enabled deterministic action at any time, and the time of the deterministic activity cannot depend on the marking of the model.

A performability measure has three defining attributes. These attributes, together with the model type, determine the appropriate solver to use. They are as followings:

- Whether the performability measure is obtained in transient or steady state;

- Whether the performability measure is measured at an instant of time or over an interval of time;

- Whether the mean, variance, or both are desired.

The selection of an appropriate solver based on these attributes and model type is summarized in <xr id="table:solverSelection" />. In addition, a list of advantages and disadvantages in using the numerical solvers is provided below. You should consider these issues in order to make effective use of the solvers.

<figtable id="table:solverSelection">

| NUMERICAL SOLVERS (FOR REWARD VARIABLES ONLY) | ||||

| Model Class | Steady-state

or Transient |

Instant-of-time

or Interval-of-time |

Mean,

Variance, or Both |

Applicable

Analytic Solver |

|---|---|---|---|---|

| All actions

exponential |

Steady-state | Instant-of-timea | Both | dss b, iss c, and tss d |

| Transient | Instant-of-time | Both | trs e and atrs f | |

| Interval-of-time | Mean | ars g | ||

| Exponential and

deterministic actions |

Steady-state | Instant-of-timeh | Both | diss i and adiss j |

| a if only rate rewards are used, the time-averaged interval-of-time steady-state measure is identical to the instant-of-time steady-state measure (if both exist). |

b dss  Direct Steady-State Solver Direct Steady-State Solver

|

c iss  Iterative Steady-State Solver Iterative Steady-State Solver

|

d tss  Takahashi Steady-State Solver Takahashi Steady-State Solver

|

e trs  Transient Solver Transient Solver

|

f atrs  Adaptive Transient Solver Adaptive Transient Solver

|

g ars  Accumulated Reward Solver Accumulated Reward Solver

|

| h provided that the instant-of-time steady-state distribution is well-defined. Otherwise, the time-averaged interval-of-time steady-state variable is computed, and only results for rate rewards should be derived. |

i diss  Deterministic Iterative Steady-State Solver Deterministic Iterative Steady-State Solver

|

j adiss  Advanced Deterministic Iterative Steady-State Solver Advanced Deterministic Iterative Steady-State Solver

|

Advantages of analytic solution

- Exact computation of solution is carried out, as opposed to simulation, in which the solution is estimated along with a confidence interval indicating how likely it is that the estimated solution is the solution of the exact computation.

- For the instant-of-time performability variables, distributions can be obtained without extra cost beyond that of obtaining their mean and variance.

- Accuracy of the solution can, for most solvers, be increased without excessive increase in the computation time (within the limitations stemming from machine accuracy).

Disadvantages of analytic solution

- Analytic solvers are not available for all models. The models must belong to one of the two model classes discussed above.

- The state space size of the generated model must be finite. Moreover, it cannot be too large relative to the memory of the computer being used. The iterative solvers iss, trs, and ars can usually deal with models having several million states. The other solvers demand additional memory on top of that needed for the storage of the transition matrix. See the discussion in the sections specific to the different solvers for more details.

- It is a challenge to create models from which all the desired performability results can be derived, but which have a state space small enough to allow for analytic solution. Considerable payoffs can be expected from exploring state space reduction approaches. In that respect, the use of the Rep construct in the composed model can be very helpful.

- Analytic solution is time-consuming if one is dealing with stiff models. A prominent class of stiff models is the one with a wide range of expected action completion times. An example is a dependability model in which there are long periods until component failures and relatively fast repairs.

Additional constraints

In addition to the constraints already imposed on the two model classes discussed above, analytic solvers of either class cannot be used to solve PEPA models which contain guard conditions.

Detailed Descriptions of Supported Numerical Solvers

The technical details of the supported numerical solvers are described below. Details are given about how their required parameters are entered into Möbius and how their output should be interpreted. The discussion includes hints on pitfalls to avoid and ways to make effective use of the solvers.

Common options among all analytic solvers

The following options are common to all the solvers:

- Accuracy is an integer indicating the degree of accuracy that the user wishes in terms of the number of digits to the right of the decimal point. Usually, the default is nine digits. If the number of digits specified exceeds the machine accuracy, then the machine accuracy is used. Note that the precise meaning of the accuracy setting depends on the solver. The precise meaning of Accuracy will be stated in the below discussion of the individual solvers.

- The State Space Name specifies the generated state space to use for computing numerical solutions. You may choose among the available state spaces by pressing the button “...” to the right of the text field next to State Space Name.

- If an Output File Name is given as (for example) “outfile”, the results are written into the file outfile_Experiment_i.txt, where

is the experiment number. This file is put in the solver’s results directory, MobiusProject/projectname/Result/solvername. If no output file is given, the output goes to the control panel window.

is the experiment number. This file is put in the solver’s results directory, MobiusProject/projectname/Result/solvername. If no output file is given, the output goes to the control panel window.

- If an Output File Name is given as (for example) “outfile”, the results are written into the file outfile_Experiment_i.txt, where

- If a Debug File Name is given as (for example) “debugfile”, debug information is written into the file debugfile_Experiment_i_debug.txt, where

is the experiment number. This file is put in the solver’s results directory, MobiusProject/projectname/Result/solvername. If no debug file is given, no debug information is generated. The debug information consists essentially of detailed information regarding the solver. It can be useful for determining whether a solution converges, but usually the same information can be obtained more naturally by setting the Verbosity. The Verbosity option will be discussed with respect to each individual solver.

is the experiment number. This file is put in the solver’s results directory, MobiusProject/projectname/Result/solvername. If no debug file is given, no debug information is generated. The debug information consists essentially of detailed information regarding the solver. It can be useful for determining whether a solution converges, but usually the same information can be obtained more naturally by setting the Verbosity. The Verbosity option will be discussed with respect to each individual solver.

- If a Debug File Name is given as (for example) “debugfile”, debug information is written into the file debugfile_Experiment_i_debug.txt, where

- Plot Complementary Distribution is not currently supported by Möbius.

- If Run in the Background is selected, the solver process is run in the background so that other control panel options can still be used while the solver is running. If no output file is specified, output automatically goes to the control panel window.

The output file of each solver contains various information. It first itemizes the options that were used, including defaults, and it contains the results of the solution process. It also records the following information:

- The project, study, and experiment for which the results were computed.

- The Global variable settings, which are the values assigned to all the global variables in the chosen experiment.

- The Number of states in process, which is the number of states that were generated by the state-space generator.

- The Number of non-zero elements, which is the number of non-zero elements in the transition matrix.

- The Computation Time, which is the total execution time, equal to the sum of user time and system time.

- The User Time, which is the total amount of time spent executing in user mode.

- The System Time, which is the total amount of time spent executing in system mode.

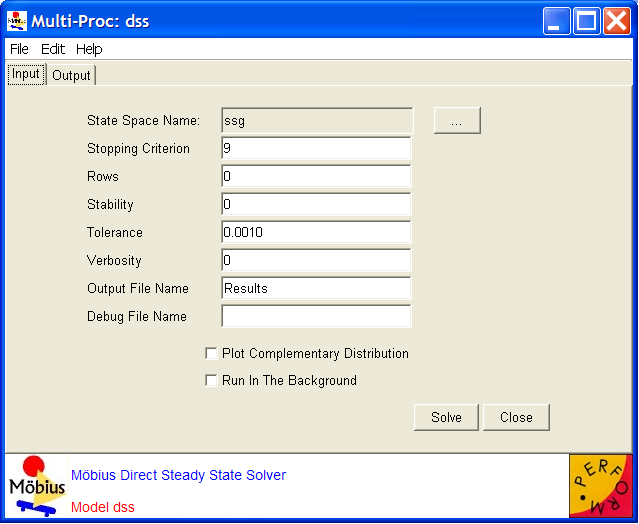

Direct steady-state solver

The direct steady-state solver (dss) solves for instant-of-time variables with  , using a numerically stable version of LU decomposition[4]. It does so by solving the system of equations given by

, using a numerically stable version of LU decomposition[4]. It does so by solving the system of equations given by  , with the additional constraint that the sum of all elements in the vector

, with the additional constraint that the sum of all elements in the vector  must equal one.

must equal one.  is the state occupancy probability vector, and

is the state occupancy probability vector, and  is the generator matrix. The generator matrix is obtained from the SAN through use of the state space generator (see Section 2.2). The solver uses two methods to reduce the fill-ins (non-zero elements) of the matrix during solution: (1) the improved generalized Markowitz strategy, which selects a next pivot element based on a heuristic that can reduce fill-in, and (2) a technique that sets elements below some threshold (which is tunable, see the options below) to zero during the execution of the solution algorithm[4]. If the problem is not too large, the solver then uses iterative refinement to reach a correct final solution. The solver calculates the mean and variance for each performability variable. The means and variances are recorded in textual form in the output file. <xr id="fig:dssSolver" /> shows the editor for the direct steady-state solver and its available options and adjustable parameters.

is the generator matrix. The generator matrix is obtained from the SAN through use of the state space generator (see Section 2.2). The solver uses two methods to reduce the fill-ins (non-zero elements) of the matrix during solution: (1) the improved generalized Markowitz strategy, which selects a next pivot element based on a heuristic that can reduce fill-in, and (2) a technique that sets elements below some threshold (which is tunable, see the options below) to zero during the execution of the solution algorithm[4]. If the problem is not too large, the solver then uses iterative refinement to reach a correct final solution. The solver calculates the mean and variance for each performability variable. The means and variances are recorded in textual form in the output file. <xr id="fig:dssSolver" /> shows the editor for the direct steady-state solver and its available options and adjustable parameters.

<figure id="fig:dssSolver">

The options are as follows:

- The Rows is an integer representing the number of rows to search for a pivot. Zero is the default, meaning pivoting is turned off by default. A value of 2 or 3 is recommended[4].

- The Stability is a short integer representing the “grace” factor by which elements may become candidates for pivots. 0 is the default, meaning pivoting is turned off. A stability factor between 4 and 16 is recommended in the literature; see [4], for example.

- The Tolerance is a double that, when multiplied by the smallest matrix element, is the threshold at which elements will be dropped in the LU decomposition. 0.0 is the default, which implies that no dropping takes place. In general, it is recommended[4] that the drop tolerance be chosen to be two to five orders of magnitude smaller than the smallest matrix element, i.e., choose the Tolerance between 10-2 and 10-5.

- Verbosity (

) sets the trace level for intermediate output. The default is no intermediate output. If

) sets the trace level for intermediate output. The default is no intermediate output. If  , then the message “completed column number n” is printed after every

, then the message “completed column number n” is printed after every  iterations during the LU decomposition computation, forward substitution, backward substitution, and iterative refinement.

iterations during the LU decomposition computation, forward substitution, backward substitution, and iterative refinement.

- Verbosity (

The output file contains the means and variances of the performability variables. It also records the following information:

- whether iterative refinement was used,

- the drop tolerance,

- the number of non-zero elements in the original matrix,

- the number of non-zero elements in the factorized matrix,

- if iterative refinement was used, the maximum difference between the cells in the

and zero vectors; if iterative refinement was not used, the relative error,

and zero vectors; if iterative refinement was not used, the relative error,

- if iterative refinement was used, the maximum difference between the cells in the

- the number of correct decimal digits in the state probabilities,

- the number of zeros in the factorized matrix,

- the number of elements dropped,

- the number of new pivots selected.

Pitfalls and Hints

- dss can be used if the steady-state distribution of the Markov model consists of a single class of recurrent non-null states. For instance, dss cannot be applied to a model with multiple absorbing states. If it is, the message “invmnorm: zero diagonal element” will appear and the performance variable will take the NaN (Not a Number) value. To find out whether the model has absorbing states, apply the Flag Absorbing States option in the state space generator.

- dss is effective when relatively small models are considered, because in the process of computing the steady-state probabilities, the original transition matrix is transformed into a matrix with many non-zero elements. Sparse matrix methods, which use the fact that elements equal to 0 do not have to be stored, can then no longer be profitably applied. This is known as the fill-in problem. Especially when large models are considered, fill-ins can become a serious bottleneck, because the order of non-zero elements is, in general, quadratic in the size of the state space. Consequently, dss cannot be used to solve models having state spaces that are larger than several thousand states. Note that the setting of the drop tolerance may be used to partially overcome fill-ins of the matrix.

- The CPU time required by dss also increases in the number of states (with a power of 3). The iterative solver iss often becomes faster than the direct solver dss when the state space size increases.

Iterative steady-state solver

The iterative steady-state solver (iss) solves for instant-of-time variables with  . Two iterative methods are provided in Möbius for this solver: successive over-relaxation (SOR) and Jacobi. iss solves the system of equations given by

. Two iterative methods are provided in Möbius for this solver: successive over-relaxation (SOR) and Jacobi. iss solves the system of equations given by  , where

, where  is the state occupancy probability vector and

is the state occupancy probability vector and  is the generator matrix. The algorithm guesses

is the generator matrix. The algorithm guesses  , calculates

, calculates  , and then comes up with a new guess related to the difference between the answer and the zero vector. It continues until the maximum difference between the cells in the two vectors is within the error bounds.

, and then comes up with a new guess related to the difference between the answer and the zero vector. It continues until the maximum difference between the cells in the two vectors is within the error bounds.

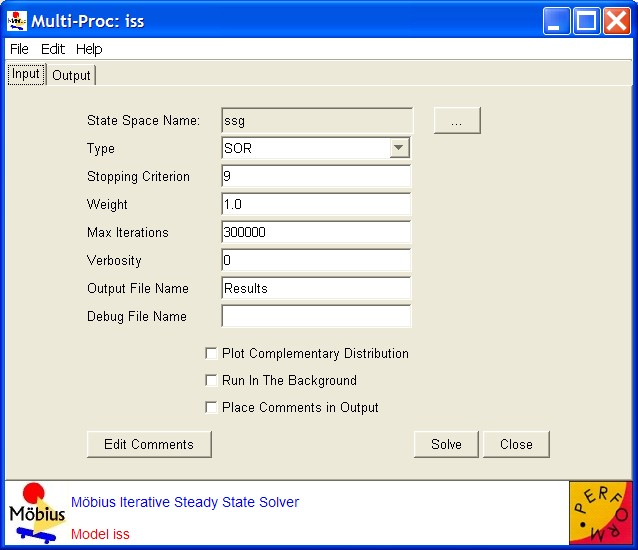

The initial guess for  is equal probabilities for all states. The acceleration factor to be used must be selected by the user. Because of its more modest space requirements, this solver can be applied to larger models than dss can, but it is not guaranteed to converge for all state spaces and initial conditions. It calculates the mean and variance for each performability variable. The means and variances are recorded in textual format in an output file. <xr id="fig:issSolver" /> shows the editor for the iterative steady-state solver and its available options and adjustable parameters.

is equal probabilities for all states. The acceleration factor to be used must be selected by the user. Because of its more modest space requirements, this solver can be applied to larger models than dss can, but it is not guaranteed to converge for all state spaces and initial conditions. It calculates the mean and variance for each performability variable. The means and variances are recorded in textual format in an output file. <xr id="fig:issSolver" /> shows the editor for the iterative steady-state solver and its available options and adjustable parameters.

<figure id="fig:issSolver">

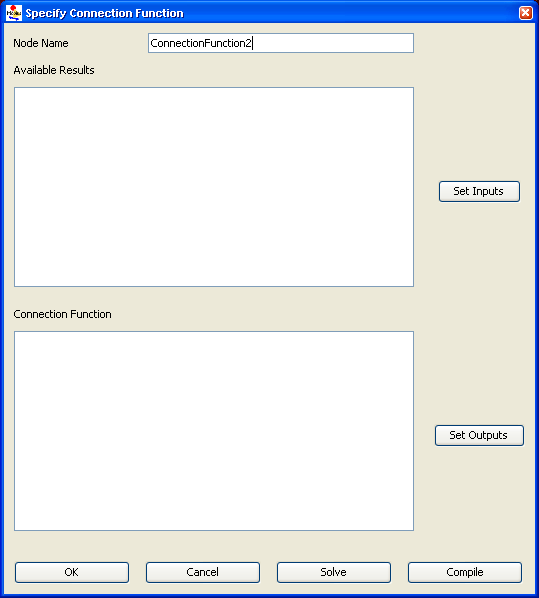

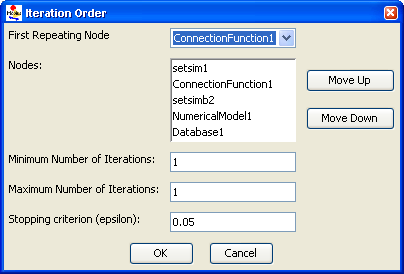

The options are as follows: